Understanding whether ARM Holdings is genuinely profiting from AI or riding a wave of market enthusiasm requires digging into specific product categories, capital deployment patterns, and actual revenue attribution. Many semiconductor companies claim AI exposure, but few can quantify how much of their business directly depends on AI workloads versus traditional computing applications. ARM's licensing model adds another layer of complexity since their revenue comes from intellectual property rather than chip sales.

Key takeaways

- ARM's AI revenue flows through licensing fees and per-chip royalties on Cortex-X and Neoverse processor designs optimized for machine learning workloads, not through direct chip sales

- The company's total addressable market expanded when data center operators began deploying ARM-based servers for inference workloads, creating a new revenue stream beyond mobile devices

- Competitive positioning against Nvidia centers on different market segments: ARM targets edge AI and efficient inference while Nvidia dominates training and high-performance compute

- Royalty structures mean ARM's AI revenue scales with chip shipments by partners like Amazon, Google, and Microsoft rather than depending only on one-time licensing fees

- Evaluating ARM's AI business requires separating design wins and partnerships from actual deployed silicon generating royalty payments

How ARM generates revenue from AI products

ARM Holdings operates through a licensing model where they design processor architectures and license those designs to chip manufacturers. Companies pay upfront licensing fees to access ARM's intellectual property, then pay per-chip royalties when they manufacture and sell devices using those designs.
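As a rough illustration of those two payment streams, the Python sketch below totals what a single licensee might pay. It assumes the royalty is a flat percentage of the chip's average selling price; real contracts may use per-unit fees or tiered rates instead, and the function name and all figures are hypothetical rather than ARM's actual terms.

```python
def licensee_payments(license_fee: float, units_shipped: int,
                      chip_asp: float, royalty_rate: float) -> dict:
    """Split one licensee's payments into the upfront fee and per-chip royalties.

    Assumption: royalties are a flat percentage of the chip's average selling
    price (ASP). Real agreements may use per-unit fees or tiers instead.
    """
    royalties = units_shipped * chip_asp * royalty_rate
    return {
        "license_fee": license_fee,
        "royalties": royalties,
        "total": license_fee + royalties,
    }


# Hypothetical partner: $10M upfront, then 2M chips at a $40 ASP and a 2% royalty.
example = licensee_payments(10_000_000, 2_000_000, 40.0, 0.02)
print(example)
```

With these made-up inputs, royalties come to 2,000,000 × $40 × 2% = $1.6 million, so the upfront fee dominates this partner's payments until shipments grow.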

The AI revenue component comes from specific product lines optimized for machine learning tasks. The Cortex-X series targets smartphones and edge devices running on-device AI models. The Neoverse platform aims at data center applications where servers handle inference workloads. Each category generates both licensing fees when partners adopt the design and ongoing royalties as those partners ship chips.

Inference workload: The process of running a trained AI model to generate predictions or outputs, as opposed to training the model initially. Each inference requires far less computation than a training run, but inference happens continuously once a model is deployed, making efficient chip design valuable for cost management.

What makes ARM's AI revenue difficult to quantify is that royalty payments can lag design adoption by 12 to 24 months or longer. A partnership announced today may not show up as royalty revenue until the partner's chips reach mass production, potentially years later. This timing gap creates a disconnect between headlines about AI design wins and actual financial impact.
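A rough sketch of that timing gap, assuming a single design win whose license fee is booked up front and whose royalties start only after a fixed lag. The six-quarter (18-month) lag falls within the 12-to-24-month range cited here; the fee and royalty amounts are invented for illustration.

```python
def quarterly_revenue(license_fee: float, royalty_per_quarter: float,
                      lag_quarters: int, horizon: int) -> list[float]:
    """Revenue ARM might see from one design win, quarter by quarter.

    Assumption: quarter 0 books the upfront license fee, and royalties only
    begin once the partner's chip reaches volume production `lag_quarters` later.
    """
    revenue = []
    for q in range(horizon):
        amount = license_fee if q == 0 else 0.0
        if q >= lag_quarters:
            amount += royalty_per_quarter
        revenue.append(amount)
    return revenue


# Hypothetical: $20M fee, then $3M of royalties per quarter starting in quarter 6.
timeline = quarterly_revenue(20e6, 3e6, lag_quarters=6, horizon=10)
for q, amount in enumerate(timeline):
    print(f"Q{q}: ${amount / 1e6:.1f}M")
```

Quarters 1 through 5 show no revenue from this partner even though the design win was announced in quarter 0, which is the disconnect between headlines and financials in miniature.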

ARM Holdings AI revenue versus traditional licensing

The bulk of ARM's business historically came from mobile device processors, particularly smartphones where their designs power the majority of devices globally. Traditional licensing deals with companies like Qualcomm and MediaTek generated steady royalty streams as billions of phones shipped annually.

AI-specific revenue represents a smaller but faster-growing segment. Data center operators building custom silicon for their cloud infrastructure generate higher royalties per chip, since server processors sell at premium prices compared to mobile chips. Amazon's Graviton processors and Google's Axion chips both use ARM architecture specifically for cloud workloads, including AI inference.

The strategic shift matters because server chips typically generate 3 to 5 times the royalty revenue per unit compared to smartphone processors. A relatively small number of data center deployments can meaningfully impact overall revenue if adoption reaches scale. The question becomes whether hyperscalers will deploy ARM-based AI infrastructure broadly or limit it to specific use cases.
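The arithmetic behind that claim can be sketched in a few lines. The unit volumes and the $1.00 mobile royalty below are placeholders; only the 3x-to-5x per-unit multiple comes from the paragraph above.

```python
def data_center_share(mobile_units: float, mobile_royalty: float,
                      server_units: float, multiple: float) -> float:
    """Share of combined royalties contributed by server chips.

    Assumption: each server chip earns `multiple` times the per-unit
    royalty of a smartphone processor.
    """
    mobile_total = mobile_units * mobile_royalty
    server_total = server_units * mobile_royalty * multiple
    return server_total / (mobile_total + server_total)


# Hypothetical volumes: 1 billion phones vs 50 million server chips (5% of phone units).
for multiple in (3, 5):
    share = data_center_share(1_000_000_000, 1.00, 50_000_000, multiple)
    print(f"{multiple}x multiple -> data center share of royalties: {share:.1%}")
```

In this toy example, server chips at 5% of phone unit volume contribute roughly 13% to 20% of combined royalties. The share depends only on the unit ratio and the multiple, not on the absolute mobile royalty, which is why a handful of large deployments can move the total.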

What's ARM's competitive position against Nvidia in AI infrastructure?

ARM and Nvidia compete in different segments of the AI infrastructure market. Nvidia dominates GPU-accelerated training workloads where their CUDA software ecosystem and high-performance chips create significant switching costs. Most large language models and computer vision systems train on Nvidia hardware.

ARM targets the inference side, where trained models run predictions at scale. Their advantage lies in power efficiency rather than raw compute performance. When a company needs to run millions of inferences per day across distributed systems, the energy cost per inference becomes a critical factor. For many smaller models and less demanding tasks, ARM-based processors can handle inference at a fraction of the power consumption of GPU-based systems, while GPUs generally remain more efficient for large models running at high throughput.
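A simple way to see why energy per inference matters at scale is to convert it into an electricity bill. The sketch below does that; the per-inference energy figures and electricity price are hypothetical placeholders, not measurements of any ARM- or GPU-based system, and the model ignores cooling, idle power, and hardware cost.

```python
JOULES_PER_KWH = 3.6e6  # 1 kWh = 3.6 million joules


def annual_energy_cost(inferences_per_day: float, joules_per_inference: float,
                       usd_per_kwh: float) -> float:
    """Yearly electricity cost of serving a steady inference load.

    Simplification: ignores cooling overhead, idle power, and hardware cost.
    """
    kwh_per_day = inferences_per_day * joules_per_inference / JOULES_PER_KWH
    return kwh_per_day * usd_per_kwh * 365


# Hypothetical energy figures, not measurements of any real ARM or GPU system.
load = 1_000_000_000  # inferences per day
for label, joules in (("lower-power option", 30.0), ("higher-power option", 90.0)):
    cost = annual_energy_cost(load, joules, usd_per_kwh=0.08)
    print(f"{label}: ${cost:,.0f} per year")
```

Because cost scales linearly with energy per inference, a processor using a third of the energy per inference cuts this line item by two-thirds at any volume; the absolute savings only become material at very large request counts.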

Intel represents a third competitive dynamic. Their x86 architecture still dominates data center installations, and they're adding AI-specific features to compete for inference workloads. ARM needs to convince data center operators to adopt a different instruction set architecture, which involves recompiling software and retraining operations teams. The switching cost creates friction even when technical specifications favor ARM designs.

How to evaluate ARM Holdings' AI strategy claims

Start by separating announcements about design partnerships from evidence of volume production. A press release about a new AI chip design using ARM architecture means potential future revenue, not current income. Look for statements about tape-outs, production timelines, and expected shipment volumes.
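One lightweight way to keep announcements and production separate is to log each design win with its latest confirmed milestone. The stage names, partner names, and helper functions below are hypothetical; in practice the milestones would come from sources such as press releases and partner earnings calls.

```python
from dataclasses import dataclass
from enum import IntEnum


class Stage(IntEnum):
    """Hypothetical milestones a design win passes through before paying royalties."""
    ANNOUNCED = 1
    TAPED_OUT = 2
    VOLUME_PRODUCTION = 3


@dataclass
class DesignWin:
    partner: str
    product: str
    stage: Stage


def summarize(wins: list[DesignWin]) -> dict[str, int]:
    """Count tracked design wins at each stage."""
    counts = {stage.name: 0 for stage in Stage}
    for win in wins:
        counts[win.stage.name] += 1
    return counts


def production_share(wins: list[DesignWin]) -> float:
    """Fraction of tracked design wins that have reached volume production."""
    if not wins:
        return 0.0
    shipping = sum(1 for win in wins if win.stage >= Stage.VOLUME_PRODUCTION)
    return shipping / len(wins)


# Hypothetical tracking list; partner and product names are placeholders.
tracked = [
    DesignWin("Partner A", "Server CPU", Stage.VOLUME_PRODUCTION),
    DesignWin("Partner B", "Inference SoC", Stage.TAPED_OUT),
    DesignWin("Partner C", "Edge AI chip", Stage.ANNOUNCED),
    DesignWin("Partner D", "Server CPU", Stage.ANNOUNCED),
]
print(summarize(tracked))
print(f"In volume production: {production_share(tracked):.0%}")
```

Only the designs in volume production are plausibly generating royalties today; the rest are future revenue potential.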

Capital expenditure patterns from ARM's licensing partners provide indirect evidence. When Amazon, Google, or Microsoft increase spending on custom silicon development, that suggests they're moving beyond experimental deployments. You can track these investments through partner earnings calls and capital allocation discussions.

Royalty revenue growth rates offer the clearest signal. If ARM's data center royalties accelerate while mobile royalties remain flat, that indicates AI-related designs are reaching production scale. The company reports royalty revenue by end market, allowing investors to isolate infrastructure growth from mobile device trends.
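A minimal sketch of that comparison, using made-up quarterly royalty figures rather than ARM's reported numbers. "Accelerating" is defined here, as a simplifying assumption, as year-over-year growth rising every quarter; a real analysis would also account for seasonality and one-off items.

```python
def yoy_growth(quarterly: list[float]) -> list[float]:
    """Year-over-year growth: each quarter versus the same quarter a year earlier."""
    return [quarterly[i] / quarterly[i - 4] - 1 for i in range(4, len(quarterly))]


def is_accelerating(growth: list[float]) -> bool:
    """Crude test (an assumption): every growth rate is higher than the one before it."""
    return all(later > earlier for earlier, later in zip(growth, growth[1:]))


# Hypothetical royalty revenue in $M, eight quarters, oldest first.
data_center = [100, 105, 110, 118, 120, 130, 145, 165]
mobile = [400, 390, 410, 420, 404, 394, 410, 418]

for name, series in (("data center", data_center), ("mobile", mobile)):
    growth = yoy_growth(series)
    rates = ", ".join(f"{g:+.1%}" for g in growth)
    print(f"{name}: YoY {rates} | accelerating: {is_accelerating(growth)}")
```

In this invented data, data center growth rises every quarter while mobile growth hovers around zero, the pattern the paragraph above describes as AI-related designs reaching scale.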

Design win: When a chip manufacturer chooses to license a specific processor design for their product. Design wins precede actual revenue by significant time periods since manufacturing, testing, and commercial deployment take additional quarters or years.

Understanding ARM's AI revenue model complexity

The licensing business model creates revenue recognition challenges that complicate analysis of ARM's AI revenue. Companies pay an upfront fee to access the intellectual property, which ARM largely recognizes when the IP is delivered rather than spreading it over the product's life. Royalty payments then trickle in over years as partners manufacture and sell chips using those designs.

High upfront licensing fees can make early partnerships look financially significant even when ongoing royalties remain minimal. A $50 million licensing deal produces a visible one-time revenue spike but says nothing about long-term royalty potential. Some partners pay large licensing fees and then never reach volume production because of technical challenges or market shifts.

Royalty rates vary significantly with processor complexity and target market. Chips built on basic Cortex-M cores for embedded applications might generate pennies per unit, while high-performance Neoverse-based data center processors could generate several dollars per unit. ARM typically doesn't disclose specific royalty rates, so investors must estimate them from company statements about revenue per chip across product categories.
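Because per-chip royalties differ so much by category, the blended average royalty per chip can rise even if no individual rate changes. The sketch below shows that mix effect with invented unit volumes and royalties; the categories loosely follow the examples in the paragraph above, but none of the numbers are ARM disclosures.

```python
def average_royalty_per_chip(mix: dict[str, tuple[float, float]]) -> float:
    """Total royalties divided by total units.

    `mix` maps a category to (units shipped in millions, royalty per unit in $).
    """
    total_units = sum(units for units, _ in mix.values())
    total_royalties = sum(units * royalty for units, royalty in mix.values())
    return total_royalties / total_units


# Hypothetical years with identical per-unit royalties; only data center volume changes.
year_1 = {"embedded": (800, 0.05), "mobile": (150, 0.50), "data center": (5, 3.00)}
year_2 = {"embedded": (800, 0.05), "mobile": (150, 0.50), "data center": (15, 3.00)}

print(f"Year 1: ${average_royalty_per_chip(year_1):.3f} per chip")
print(f"Year 2: ${average_royalty_per_chip(year_2):.3f} per chip")
```

Tripling data center volume lifts the blended figure from about $0.136 to about $0.166 per chip, roughly a 22% increase, while total units grow only about 1%. A rising average royalty per chip can therefore signal a mix shift toward higher-value designs.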

Where ARM Holdings fits in the AI infrastructure stack

AI infrastructure consists of multiple layers from silicon through software frameworks to application interfaces. ARM sits at the silicon layer providing the fundamental processor designs that handle computation. Their success depends partly on software ecosystem development since developers need tools to efficiently deploy AI models on ARM-based hardware.

The company doesn't control the full stack the way Nvidia does with their integrated hardware and CUDA software platform. ARM provides the processor design, while partners like Amazon and Google design the actual chips, have them fabricated by foundries, and develop their own software tools. This distributed approach means ARM captures a smaller portion of the total value but avoids the capital intensity of chip manufacturing.

Edge AI applications represent another strategic focus where ARM's power efficiency matters more than absolute performance. Running AI models on smartphones, IoT devices, or automotive systems requires processors that deliver sufficient performance within strict power budgets. ARM's Cortex-M and Cortex-A series target these applications, creating AI revenue opportunities beyond data center infrastructure.

How to assess ARM's AI growth potential

Evaluate the total addressable market expansion rather than just ARM's market share claims. The shift toward distributed AI inference creates opportunities for ARM-based processors in edge devices, smartphones, and data centers. If inference workloads grow faster than training workloads, that favors ARM's efficiency-focused designs over Nvidia's performance-optimized GPUs.

Partnership depth matters more than partnership quantity. Five hyperscale customers deploying ARM-based AI infrastructure at scale generate more revenue than fifty smaller design wins that never reach volume production. Track which partners are moving from evaluation to production deployment and which workloads they're targeting.
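To put depth versus quantity in numbers, the sketch below weights each opportunity by an assumed probability of reaching volume production. Every volume, royalty, and probability is a hypothetical input chosen for illustration, not an estimate for any real partner.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Opportunity:
    units_per_year: float    # chips shipped per year once in volume production
    royalty_per_unit: float  # dollars paid to ARM per chip (hypothetical)
    p_production: float      # assumed probability the design ever ships in volume


def expected_royalties(pipeline: list[Opportunity]) -> float:
    """Probability-weighted annual royalties across a set of design wins."""
    return sum(o.units_per_year * o.royalty_per_unit * o.p_production for o in pipeline)


# Hypothetical pipelines: a few deep partnerships vs many shallow design wins.
hyperscale = [Opportunity(2_000_000, 3.00, 0.9) for _ in range(5)]
small_wins = [Opportunity(50_000, 0.50, 0.2) for _ in range(50)]

print(f"5 hyperscale deployments: ${expected_royalties(hyperscale):,.0f}")
print(f"50 small design wins:     ${expected_royalties(small_wins):,.0f}")
```

Under these assumptions the five large deployments are worth more than 100 times the fifty small wins; the exact ratio matters less than the habit of weighting each win by its realistic chance of shipping.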

Software ecosystem maturity provides a leading indicator of adoption potential. Developers need mature frameworks, libraries, and tools to deploy AI models efficiently on ARM hardware. Monitor adoption of runtimes such as TensorFlow Lite (now LiteRT), PyTorch Mobile (being superseded by ExecuTorch), and ONNX Runtime on ARM platforms as a proxy for future deployment growth.

Competitive responses signal market validation. If Intel and AMD aggressively enhance their AI inference capabilities in response to ARM's data center push, that suggests ARM is gaining meaningful traction. Conversely, if competitors ignore ARM's AI positioning, that might indicate limited market impact.

Try it yourself

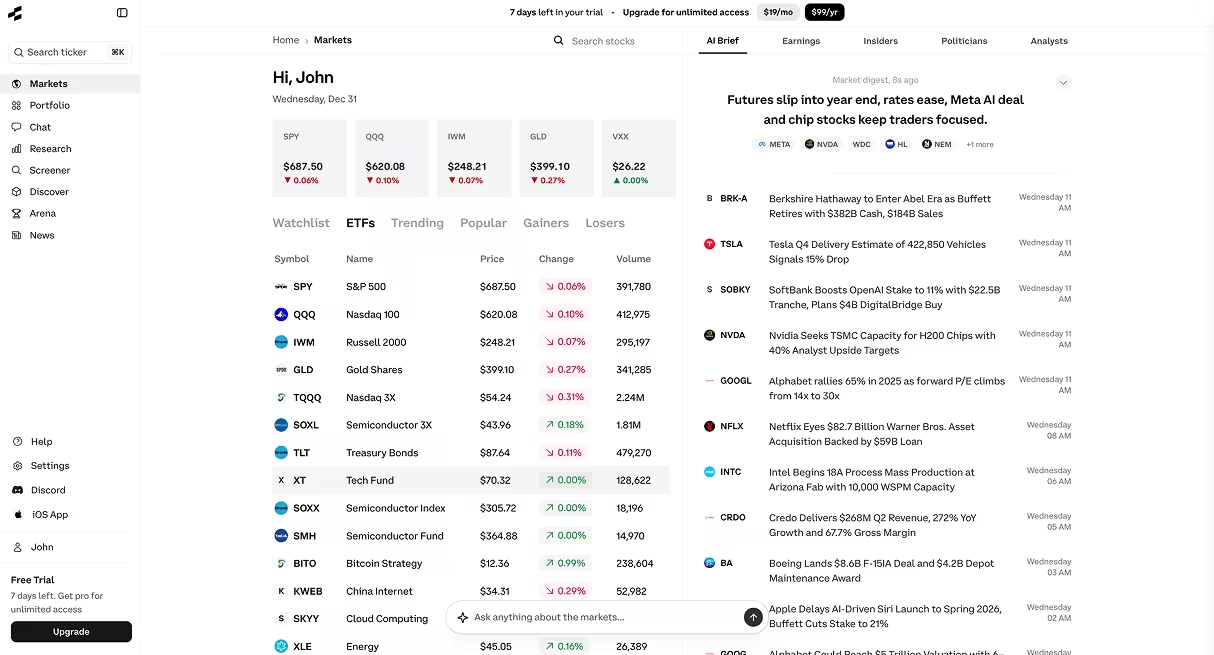

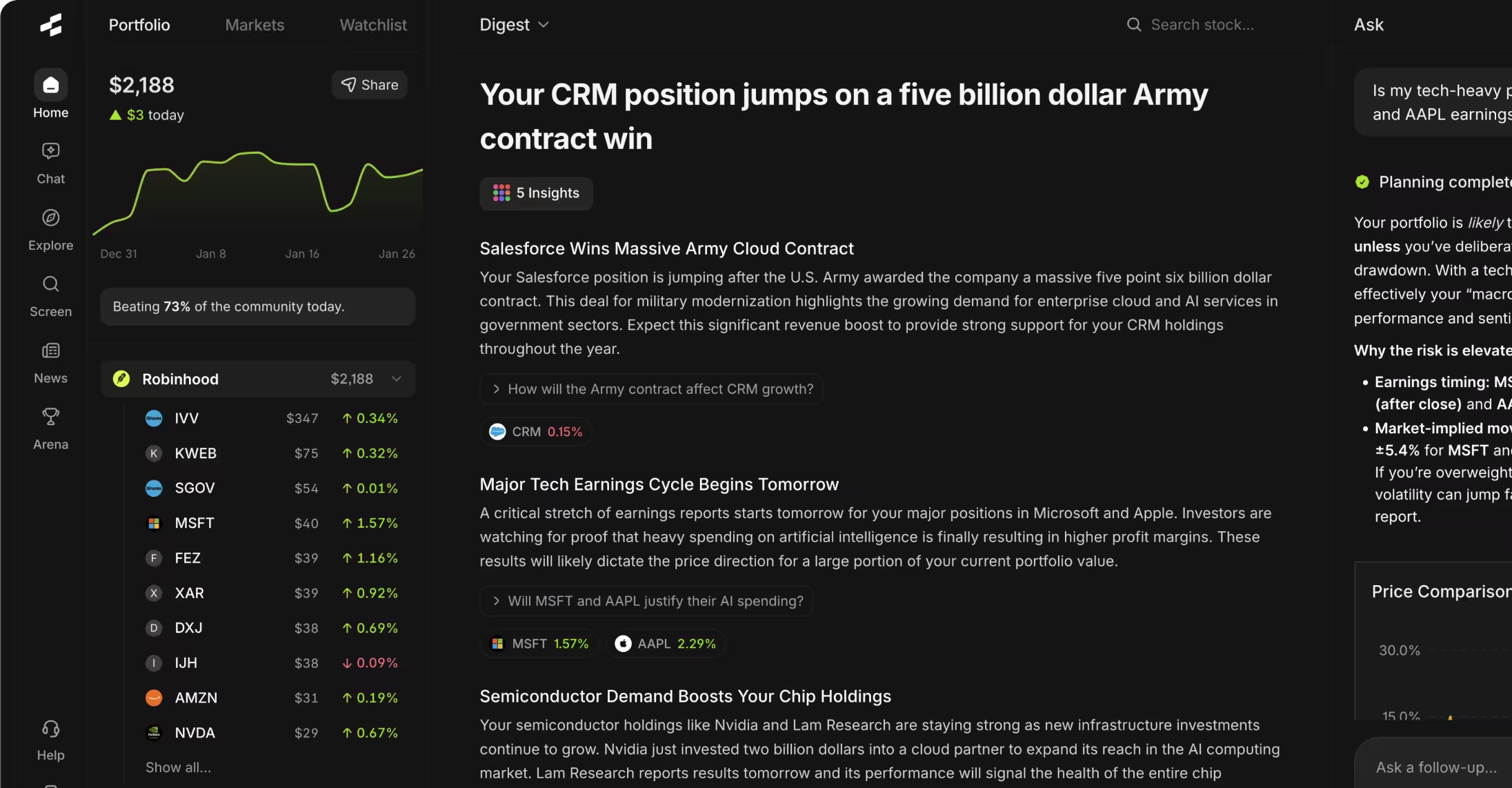

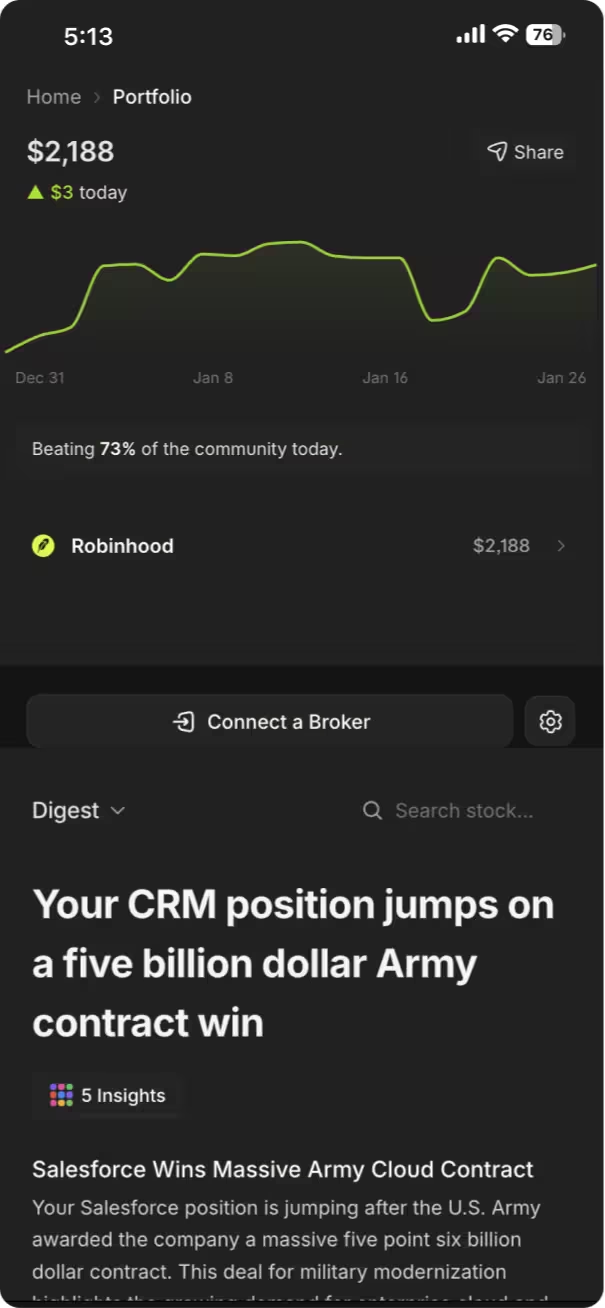

Want to run this kind of analysis on your own? Copy any of these prompts and paste them into the Rallies AI Research Assistant:

- I want to understand ARM Holdings' AI strategy beyond the hype — how much revenue are they actually generating from AI-related products, how does that compare to their traditional chip licensing business, and what's their competitive position against Nvidia and Intel in AI infrastructure?

- What's ARM Holdings's AI strategy? Are they actually making money from AI, or is it mostly future promises?

- Show me ARM's royalty revenue breakdown by end market over the past eight quarters and highlight which segments are accelerating — I want to identify whether data center growth is offsetting any mobile slowdown and what that implies for their AI revenue trajectory.

Frequently asked questions

How much of ARM Holdings revenue comes from AI?

ARM doesn't break out AI-specific revenue as a separate line item in their financial reporting. Their royalty revenue flows from multiple end markets including mobile, automotive, IoT, and data center infrastructure. AI-related revenue appears primarily in the data center and mobile categories, but these segments also include non-AI workloads. Investors need to estimate AI contribution based on statements about royalty rates, design win announcements, and growth rates in relevant end markets rather than direct disclosure.

Does ARM compete directly with Nvidia for AI chips?

ARM and Nvidia target different parts of the AI workload spectrum. Nvidia dominates training, where their GPUs excel at the parallel processing that model development requires. ARM focuses on inference workloads, where their power-efficient processor designs handle predictions at scale. The two companies compete more directly in edge AI scenarios where devices need to run models locally, but even there they often complement each other, with Nvidia providing training infrastructure and ARM powering the edge devices running inference. Nvidia is also an ARM licensee: its Grace data center CPU is built on ARM Neoverse cores.

What are ARM Holdings AI products?

ARM's AI product portfolio includes the Cortex-X series for high-performance mobile and edge AI, the Cortex-A series for mainstream devices, and the Neoverse platform for data center infrastructure. These are processor designs rather than physical chips. ARM also offers the Ethos neural processing unit designs specifically optimized for AI workloads and the Mali GPU designs that include machine learning acceleration features. Each product targets specific performance and power efficiency requirements across different deployment scenarios.

How does ARM's licensing model work for AI revenue?

Companies pay ARM an upfront licensing fee to access processor designs, then pay per-chip royalties when they manufacture devices using those designs. For AI applications, this means a cloud provider licensing a Neoverse design pays an initial fee and then pays royalties on each server chip it produces. Royalties are generally set as a percentage of the chip's selling price or as a per-unit fee, so high-performance data center processors generate larger per-unit payments than simpler embedded processors. This creates a lag between design announcements and revenue recognition, since manufacturing and deployment take time.

Can ARM Holdings challenge Intel in data centers with AI workloads?

ARM's data center opportunity centers on specific workloads where their efficiency advantages justify the switching costs from x86 architecture. Cloud providers building custom silicon for their infrastructure have successfully deployed ARM-based servers for certain applications. The challenge involves convincing enterprises running third-party software to adopt ARM-based servers, which requires application compatibility and ecosystem maturity. AI inference workloads represent one area where the efficiency benefits are compelling enough that major cloud providers have invested in ARM-based solutions despite the architectural change.

What's the difference between ARM AI revenue and reported AI design wins?

Design wins represent agreements where partners choose ARM's technology for upcoming products. These generate upfront licensing fees but don't create significant ongoing revenue until those products reach volume manufacturing. A design win announced today might not generate substantial royalty revenue for 18 to 36 months depending on the partner's development and production timeline. This timing difference means design win announcements signal future revenue potential rather than current financial impact, making it important to track both new partnerships and production status of previously announced designs.

How do ARM's AI capabilities compare to custom AI accelerators?

ARM provides general-purpose processor designs with AI-optimized features rather than dedicated AI accelerators like Google's TPUs or specialized inference chips. This gives ARM-based chips flexibility to handle diverse workloads but potentially lower peak performance on specific AI tasks compared to purpose-built accelerators. The tradeoff depends on the use case since general-purpose processors make sense for applications running varied workloads while dedicated accelerators optimize for specific model architectures. Many systems use both approaches with ARM-based processors handling general tasks and accelerators tackling specific AI operations.

What metrics indicate whether ARM's AI strategy is succeeding?

Track royalty revenue growth in the infrastructure segment, since that captures data center deployments, including AI workloads. Watch for increases in average royalty per chip, which would indicate partners are adopting higher-value designs like Neoverse rather than simpler architectures. Monitor design win announcements followed by production timeline updates to gauge conversion from partnership to revenue. Compare ARM's data center royalty growth against overall data center chip market growth to assess market share trends. Finally, observe whether major cloud providers expand their ARM-based deployments beyond initial pilot programs into broader infrastructure rollouts.
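The market share comparison reduces to a ratio of growth factors. A minimal sketch with made-up growth rates; in practice the inputs would come from ARM's reported royalty trends and an outside estimate of data center chip market growth, and the function name is illustrative.

```python
def relative_share_change(arm_growth: float, market_growth: float) -> float:
    """Implied change in ARM's share of a market, given both growth rates.

    A positive result means ARM's data center royalties grew faster than the
    overall data center chip market. Royalty growth can also come from higher
    royalty rates, so this is a proxy for share, not a direct measurement.
    """
    return (1 + arm_growth) / (1 + market_growth) - 1


# Hypothetical growth rates: ARM data center royalties +40%, market +20%.
print(f"{relative_share_change(0.40, 0.20):+.1%}")
```

Here ARM's implied share rises by about 16.7% in relative terms; equal growth rates would leave the implied share unchanged.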

Bottom line

ARM Holdings' AI strategy centers on power-efficient processor designs for inference workloads rather than competing with Nvidia in training applications. The licensing model creates timing lags between partnership announcements and actual revenue recognition, making it essential to distinguish between design wins and production deployments. Evaluating their AI business requires tracking royalty revenue trends by end market, assessing partnership depth with major cloud providers, and understanding how their efficiency advantages translate into meaningful deployments.

For investors researching semiconductor companies and AI infrastructure opportunities, the AI investing landscape extends beyond obvious GPU manufacturers into architecture licensing and edge computing applications. Use the stock screener to compare ARM against other semiconductor companies based on growth metrics and market positioning, or explore the ARM stock page for detailed financial analysis and competitive context.

Disclaimer: This article is for educational and informational purposes only. It does not constitute investment advice, financial advice, trading advice, or any other type of advice. Rallies.ai does not recommend that any security, portfolio of securities, transaction, or investment strategy is suitable for any specific person. All investments involve risk, including the possible loss of principal. Past performance does not guarantee future results. Before making any investment decision, consult with a qualified financial advisor and conduct your own research.

Written by Gav Blaxberg, CEO of WOLF Financial and Co-Founder of Rallies.ai.